Gibbs sampling

Abstract

This article provides the recipes, simple Python codes and mathematical proof to the most basic form of Gibbs sampling.

What is Gibbs sampling?

Gibbs sampling is a method to generate samples from a multivariant distribution using only conditional distributions , and so on. It is used when the original distribution is hard to calculate but the conditional distributions are available.

How does it work?

Procedure

Starting with a sample , to generate a new sample , do the following

- Sample from .

- Sample from .

- Sample from

- … and so on. The last term is sampling from .

- Now we have the new sample . Repeat for the next sample.

Note that the value of a new sample depends only on the the previous one, and not the one before, Gibbs sampling belongs to a class of methods called Markov Chain Monte Carlo (MCMC).

Example

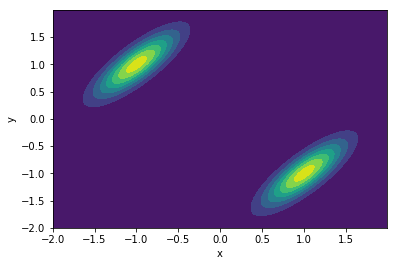

Consider a mixture of two 2-dimensional normal distribution below with joint distribution .

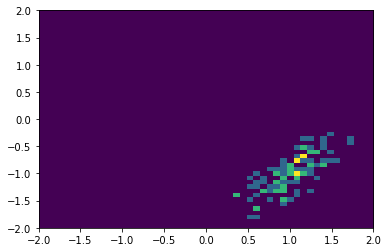

We can use the procedure above to to generate samples using only the conditional distribution and . The sample chain could initially be trapped in one local distribution:

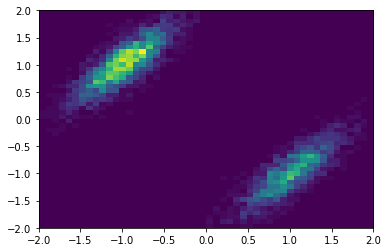

But if enough number of points are sampled, the original distribution is recovered:

The codes that generate this example can be found here.

Why does it work?

This section outlines the proof of Gibbs sampling. I mostly follow MIT 14.384 Time Series Analysis, Lectures 25 and 26.

Markov Chain Monte Carlo (MCMC)

Gibbs sampling generates a Markov chain. That’s obvious because the series , , … depends only on the previous value. An important concept to understand is the transition Probability , the probability of generating sample from the previous sample .

Suppose we want to sample a distribution , we want to generate samples in a way that the new sample still follows distribution . Mathematically, for transition probability , the probability measure is invariant if

and the transition probability is

- irreducible (i.e. any point can reach any other points)

- aperiodic

The MCMC problem is to find a transition probability such that is invariant.

Proof

The procedure of Gibbs sampling outlined previously can be written as a transition probability

Let be the distribution we want to sample from. We need to show is invariant under this transition, i.e.

In other words, if we follow to get new sample based on the previous sample , and repeat this process over and over again, we stay in the same distribution .

Since we need to integrate over , the first thing is to rewrite using Bayes rule so that there’s no conditional on . Bayes rule is

or if there’s always a conditional on :

Using

We get

Substituting this to Eq.(), and noticing that does not depend on the integrating variable ,

The first product

because

So we have

We need to show the integral on the right hand side to be exactly 1.

We start by writing out the terms explicitly and use ,

The only term that depends on is and it is integrated out to be 1. The integral becomes

Turning each fraction into a conditional probability

and so on. The integral becomes

Only the first term depends on and it is integrated out to be 1. After that, only the leading term depends on and it is integrated out to be 1 and so on. Therefore the whole integral is 1 resulting in

So the transition probability from Gibbs sampling is invariant. If we sample according to the Gibbs scheme, we can get unbiased samples of .